In this blog we shall see:

- Containers and Persistent Storage

- Terminology and background

- Our approach

- Setting up

- Gluster and iSCSI target

- iSCSI Initiator

- Docker host and Container

- Conclusion

- References

Containers and Persistent Storage

Containers are stateless entities used to deploy applications, so they need persistent storage to keep application data available across container incarnations.

Persistent storage for containers is of two types: shared and non-shared.

Shared storage:

Consider this a volume/store where multiple containers perform both read and write operations on the same data. It is useful for applications such as web servers that need to serve the same data from multiple container instances.

Non-shared storage:

Only a single container can perform write operations to this store at a given time.

This blog explains non-shared storage for containers using Gluster.

Terminology and background

Gluster is a well-known scale-out distributed storage system that is flexible in its design and easy to use. One of its key goals is to provide high availability of data. Despite its distributed nature, Gluster is easy to set up, and adding or removing storage servers in a Gluster cluster takes little effort. These capabilities, along with the other data services Gluster provides, make it a very nice software-defined storage platform.

Gluster volumes can be accessed through the FUSE module. However, each filesystem operation then requires several context switches between userspace and the kernel, which hurts performance. Libgfapi is a userspace library for accessing data in GlusterFS. It performs I/O on Gluster volumes without going through FUSE or the kernel VFS layer, and so avoids those context switches. It exposes a filesystem-like API for accessing Gluster volumes. Samba, NFS-Ganesha, QEMU and now tcmu-runner all use libgfapi to integrate with GlusterFS.

SCSI uses a client-server model. The client (the initiator) issues I/O requests, and the target, which is a storage device, services them. The SCSI target subsystem enables a computer node to behave as a SCSI storage device, responding to storage requests from other SCSI initiator nodes.

In simple terms, SCSI is a set of standards for physically connecting and transferring data between computers and peripheral devices.

The most common implementation of the SCSI target subsystem is an iSCSI server. iSCSI transports block-level data between the iSCSI initiator and the target, which resides on the actual storage device. The iSCSI protocol wraps SCSI commands and sends them over TCP/IP. When the packets arrive, the other end unwraps them back into the same SCSI commands, so the operating system sees the remote device as a local SCSI device.

In other words iSCSI is SCSI over TCP/IP.

The LIO project began with the iSCSI design as its core objective, and created a generic SCSI target subsystem to support iSCSI. LIO is the SCSI target in the Linux kernel. It is entirely kernel code, and allows exported SCSI logical units (LUNs) to be backed by regular files or block devices.

TCM is another name for LIO, the in-kernel SCSI target (server). Existing TCM backstores run in the kernel. TCMU (TCM in Userspace) lets userspace programs implement backstores instead, which enables a wider variety of backstores without any kernel code. The TCMU userspace-passthrough backstore therefore allows a userspace process to handle requests to a LUN.

GlusterFS is one such backstore, and it brings clustered network storage capabilities.

tcmu-runner builds on the TCMU framework and handles the messy details of the TCMU interface. Its glfs handler lets a file in a GlusterFS volume back a LUN, accessed over Gluster's libgfapi interface.

targetcli is the general management tool for LIO/TCM/TCMU, and its shell interface is used to configure LIO.

Our Approach

With all the background above in place, I shall now jump into the essence of this blog and explain how a file in a Gluster volume can be exposed as non-shared persistent storage in Docker.

We use iSCSI, which is highly sensitive to network performance: jitter in the connection makes iSCSI perform poorly.

At the same time, containers suffer from suboptimal network performance because Docker's NAT does not deliver good performance.

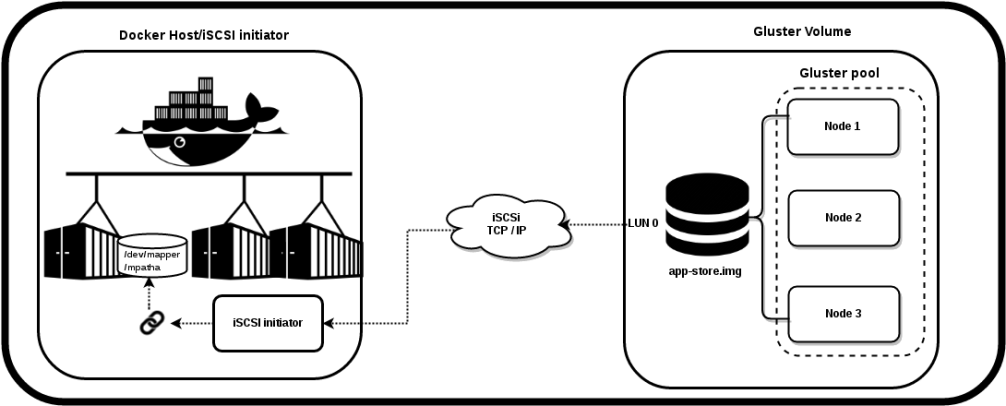

Hence, in our approach the Docker host initiates the iSCSI session, attaches the iSCSI target as a block device with multipath enabled, mounts it on a local directory and shares that directory with the container through a bind mount. The iSCSI traffic never passes through Docker's NAT, so we don't lose performance.
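The flow just described can be summarised as a short script. This is only a sketch under stated assumptions. `run` and `attach_and_share` are hypothetical helpers. The script assumes the LUN has already been partitioned and formatted and appears as /dev/mapper/mpatha1, as set up later in this post. With DRY_RUN=1 it prints the iscsiadm, mount and docker commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of the approach: the Docker host (not the container)
# logs in to every iSCSI portal, mounts the multipath device locally and
# bind-mounts that directory into the container, so the iSCSI traffic never
# goes through Docker's NAT. Set DRY_RUN=1 to only print the commands.

run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# attach_and_share DEVICE MOUNTPOINT IMAGE PORTAL...
attach_and_share() {
    local device=$1 mountpoint=$2 image=$3 portal
    shift 3
    # One discovery + login per gateway gives one path per gateway.
    for portal in "$@"; do
        run iscsiadm -m discovery -t st -p "$portal" -l
    done
    # Mount the (already formatted) multipath device on the host.
    run mkdir -p "$mountpoint"
    run mount "$device" "$mountpoint"
    # Hand the host directory to the container as /mnt.
    run docker run -v "$mountpoint:/mnt:z" -t -i "$image" /bin/bash
}
```

For example, `DRY_RUN=1 attach_and_share /dev/mapper/mpatha1 /root/nonshared-store fedora Node1 Node2 Node3` prints the three logins, then the mount and the docker run step.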

Now without any delay let me walk through the setup details…

Setting Up

You need four nodes for this setup. Three act as Gluster nodes, from which the iSCSI target is served, and one machine acts as the iSCSI initiator/Docker host where containers are deployed.

Gluster and iSCSI target Setup

On all the nodes I installed a fresh Fedora 24 Beta Server.

Perform the following on all three Gluster nodes:

# dnf upgrade

# dnf install glusterfs-server

(this installed version 3.8.0-0.2.rc2.fc24.x86_64)

# iptables -F

# vi /etc/glusterfs/glusterd.vol

Add the line 'option rpc-auth-allow-insecure on' inside the 'volume management' block. libgfapi needs this option.
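If you would rather not edit the file by hand on all three nodes, the same change can be scripted. This is a minimal sketch. It assumes GNU sed, as shipped with Fedora, and that the management volume in glusterd.vol is closed by an `end-volume` line. The function name `allow_insecure_rpc` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add "option rpc-auth-allow-insecure on" to glusterd.vol
# without an editor. Assumes GNU sed and that the management volume is closed
# by a line reading "end-volume".
allow_insecure_rpc() {
    local vol=${1:-/etc/glusterfs/glusterd.vol}
    # Nothing to do if the option is already there (keeps reruns harmless).
    if grep -q '^[[:space:]]*option rpc-auth-allow-insecure on' "$vol"; then
        return 0
    fi
    # Insert the option right before the first "end-volume" line (GNU sed).
    sed -i '0,/^end-volume/s/^end-volume/    option rpc-auth-allow-insecure on\nend-volume/' "$vol"
}
```

Running it twice leaves a single copy of the option, so it is safe to include in a provisioning script that runs before `systemctl start glusterd`.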

# systemctl start glusterd

On Node1, perform the following:

# form a gluster trusted pool of nodes

[root@Node1 ~]# gluster peer probe Node2

[root@Node1 ~]# gluster peer probe Node3

[root@Node1 ~]# gluster pool list

UUID Hostname State

51023fac-edc7-4149-9e3c-6b8207a02f7e Node1 Connected

ed9af9d6-21f0-4a37-be2c-5c23eff4497e Node2 Connected

052e681e-cdc8-4d3b-87a4-39de26371b0f Node3 Connected

# create a 3-way replicated volume ('force' is needed because the bricks are on the root filesystem)

[root@Node1 ~]# gluster vol create nonshared-store replica 3 Node1:/subvol1 Node2:/subvol2 Node3:/subvol3 force

# set the volume to allow insecure port ranges

[root@Node1 ~]# gluster vol set nonshared-store server.allow-insecure on

# start the volume

[root@Node1 ~]# gluster vol start nonshared-store

# check the status

[root@Node1 ~]# gluster vol status

# mount the volume and create the required 8G target file in it

[root@Node1 ~]# mount -t glusterfs Node1:/nonshared-store /mnt

[root@Node1 ~]# cd /mnt

[root@Node1 ~]# fallocate -l 8G app-store.img

# finally, leave the mount point and unmount the volume

[root@Node1 ~]# cd

[root@Node1 ~]# umount /mnt
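Before creating the volume, it is worth confirming that every peer in the pool is Connected. This is a minimal sketch. The hypothetical helper `all_peers_connected` reads `gluster pool list` output on stdin and assumes the header line plus UUID/Hostname/State columns shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `gluster pool list` output on stdin and succeed
# only if there is at least one peer and every peer's State is "Connected".
all_peers_connected() {
    awk 'NR == 1 { next }                       # skip the "UUID Hostname State" header
         NF >= 3 { total++; if ($NF == "Connected") ok++ }
         END { exit !(total > 0 && ok == total) }'
}
```

Usage: `gluster pool list | all_peers_connected && echo "all peers connected"`.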

Now that the Gluster setup is done, let's expose the file ‘app-store.img’ as an iSCSI target by creating a LUN.
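The `user:glfs` backstore locates its backing file through a single string of the form VOLUME@HOST/PATH. In the `create` command used in the targetcli session, `nonshared-store` is the Gluster volume, `Node1` is the Gluster server to contact, and `app-store.img` is the file created with fallocate, relative to the volume root. saveconfig.json later stores the same string with a `glfs/` prefix. The sketch below splits such a string into its parts. The helper name `parse_glfs_cfg` is hypothetical, and it assumes the volume name contains no `@` and the host name no `/`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: split a user:glfs config string VOLUME@HOST/PATH
# (optionally prefixed with "glfs/", as stored in saveconfig.json) into parts.
parse_glfs_cfg() {
    local cfg=${1#glfs/}      # drop the handler prefix if present
    local volume=${cfg%%@*}   # everything before the first '@'
    local rest=${cfg#*@}      # HOST/PATH
    local host=${rest%%/*}    # everything before the first '/'
    local path=${rest#*/}     # the rest is the path inside the volume
    printf 'volume=%s\nhost=%s\npath=%s\n' "$volume" "$host" "$path"
}
```

For example, `parse_glfs_cfg glfs/nonshared-store@Node1/app-store.img` prints the volume `nonshared-store`, the host `Node1` and the path `app-store.img` on separate lines.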

Again on Node1:

[root@Node1 ~]# dnf install tcmu-runner targetcli

# enter the admin console

[root@Node1 ~]# targetcli

targetcli shell version 2.1.fb42

Copyright 2011-2013 by Datera, Inc and others.

For help on commands, type 'help'.

/> ls

o- / ...................................................... [...]

o- backstores ........................................... [...]

| o- block ............................... [Storage Objects: 0]

| o- fileio .............................. [Storage Objects: 0]

| o- pscsi ............................... [Storage Objects: 0]

| o- ramdisk ............................. [Storage Objects: 0]

| o- user:glfs ........................... [Storage Objects: 0]

| o- user:qcow ........................... [Storage Objects: 0]

o- iscsi ......................................... [Targets: 0]

o- loopback ...................................... [Targets: 0]

o- vhost ......................................... [Targets: 0]

# create an 8G user:glfs storage object named "glfsLUN", backed by app-store.img on the gluster volume

/> cd /backstores/user:glfs

/backstores/user:glfs> create glfsLUN 8G nonshared-store@Node1/app-store.img

Created user-backed storage object glfsLUN size 8589934592.

# create a target

/backstores/user:glfs> cd /iscsi/

/iscsi> create iqn.2016-06.org.gluster:Node1

Created target iqn.2016-06.org.gluster:Node1.

Created TPG 1.

Global pref auto_add_default_portal=true

Created default portal listening on all IPs (0.0.0.0), port 3260.

# set LUN

/iscsi> cd /iscsi/iqn.2016-06.org.gluster:Node1/tpg1/luns

iscsi/iqn.20...de1/tpg1/luns> create /backstores/user:glfs/glfsLUN 0

Created LUN 0.

iscsi/iqn.20...de1/tpg1/luns> cd /

# set ACL (it's the IQN of an initiator you permit to connect)

# Copy InitiatorName from Docker host machine

# which will be in ‘/etc/iscsi/initiatorname.iscsi’

# In my case it is iqn.1994-05.com.redhat:8277148d16b2, used in the next commands

/> cd /iscsi/iqn.2016-06.org.gluster:Node1/tpg1/acls

/iscsi/iqn.20...de1/tpg1/acls> create iqn.1994-05.com.redhat:8277148d16b2

Created Node ACL for iqn.1994-05.com.redhat:8277148d16b2

Created mapped LUN 0.

# set UserID and password for authentication

/iscsi/iqn.20...de1/tpg1/acls> cd iqn.1994-05.com.redhat:8277148d16b2

/iscsi/iqn.20...:8277148d16b2> set auth userid=username

Parameter userid is now 'username'.

/iscsi/iqn.20...:8277148d16b2> set auth password=password

Parameter password is now 'password'.

/iscsi/iqn.20...:8277148d16b2> cd /

/> saveconfig

Last 10 configs saved in /etc/target/backup.

Configuration saved to /etc/target/saveconfig.json

/> exit

Global pref auto_save_on_exit=true

Last 10 configs saved in /etc/target/backup.

Configuration saved to /etc/target/saveconfig.json
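The interactive session above can also be scripted, because targetcli accepts a path and a command as arguments (for example `targetcli /iscsi create IQN`). The sketch below replays the same steps for one node, under stated assumptions. `tcli` and `export_glfs_lun` are hypothetical helpers. The IQN follows the iqn.2016-06.org.gluster:NODE pattern used here, and the 8G size, volume name and file name are the ones from this setup. With DRY_RUN=1 it only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical non-interactive replay of the targetcli session above.
# With DRY_RUN=1 the targetcli commands are printed instead of executed.
tcli() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "+ targetcli $*"
    else
        targetcli "$@"
    fi
}

# export_glfs_lun NODE INITIATOR_IQN USER PASSWORD
export_glfs_lun() {
    local node=$1 initiator=$2 user=$3 pass=$4
    local iqn="iqn.2016-06.org.gluster:$node"
    local tpg="/iscsi/$iqn/tpg1"
    # Backstore: 8G file app-store.img in volume nonshared-store, via this node.
    tcli /backstores/user:glfs create glfsLUN 8G "nonshared-store@$node/app-store.img"
    # Target with its default TPG and portal, then LUN 0 from the backstore.
    tcli /iscsi create "$iqn"
    tcli "$tpg/luns" create /backstores/user:glfs/glfsLUN 0
    # ACL for the Docker host's initiator, with CHAP credentials.
    tcli "$tpg/acls" create "$initiator"
    tcli "$tpg/acls/$initiator" set auth "userid=$user"
    tcli "$tpg/acls/$initiator" set auth "password=$pass"
    tcli saveconfig
}
```

On Node1 this corresponds to `export_glfs_lun Node1 iqn.1994-05.com.redhat:8277148d16b2 username password`. On Node2 and Node3, the backstore must get Node1's wwn before the target is created, as described below, so those steps cannot simply be run back to back there.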

Multipathing helps achieve high availability of the LUN on the client side: even when a node is down for maintenance, the LUN remains accessible through the remaining paths.

Multipathing only works if the LUN exported by all three gateways/nodes shares the same wwn. If the wwns don't match, the client sees three devices instead of three paths to the same device.

Copy the wwn of the glfsLUN storage object from Node1 (the "wwn" value below):

[root@Node1 ~]# cat /etc/target/saveconfig.json

{

"fabric_modules": [],

"storage_objects": [

{

"config": "glfs/nonshared-store@Node1/app-store.img",

"name": "glfsLUN",

"plugin": "user",

"size": 8589934592,

"wwn": "cdc1e292-c21a-41ce-aa3f-d49658633bdf"

}

],

"targets": [

[...]
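saveconfig.json also contains "wwn" keys for the targets (their IQNs), so a plain grep for "wwn" can pick up the wrong value. This sketch parses the JSON instead. It assumes python3 is installed and that the storage object is named glfsLUN as above. `get_wwn` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the wwn of the named storage object (default
# glfsLUN) from a targetcli saveconfig.json, parsing the JSON with python3.
# get_wwn CONFIG [NAME]
get_wwn() {
    python3 - "$1" "${2:-glfsLUN}" <<'EOF'
import json, sys
path, name = sys.argv[1], sys.argv[2]
with open(path) as f:
    cfg = json.load(f)
for so in cfg.get("storage_objects", []):
    if so.get("name") == name:
        print(so["wwn"])
EOF
}
```

On Node1, `WWN=$(get_wwn /etc/target/saveconfig.json)` would capture the value to carry over to the other gateways.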

Now on Node2:

[root@Node2 ~]# dnf install tcmu-runner targetcli

# enter the admin console

[root@Node2 ~]# targetcli

targetcli shell version 2.1.fb42

Copyright 2011-2013 by Datera, Inc and others.

For help on commands, type 'help'.

/> ls

o- / ...................................................... [...]

o- backstores ........................................... [...]

| o- block ............................... [Storage Objects: 0]

| o- fileio .............................. [Storage Objects: 0]

| o- pscsi ............................... [Storage Objects: 0]

| o- ramdisk ............................. [Storage Objects: 0]

| o- user:glfs ........................... [Storage Objects: 0]

| o- user:qcow ........................... [Storage Objects: 0]

o- iscsi ......................................... [Targets: 0]

o- loopback ...................................... [Targets: 0]

o- vhost ......................................... [Targets: 0]

# create an 8G user:glfs storage object named "glfsLUN", backed by app-store.img on the gluster volume

/> /backstores/user:glfs create glfsLUN 8G nonshared-store@Node2/app-store.img

Created user-backed storage object glfsLUN size 8589934592.

/> saveconfig

Last 10 configs saved in /etc/target/backup.

Configuration saved to /etc/target/saveconfig.json

/> exit

Global pref auto_save_on_exit=true

Last 10 configs saved in /etc/target/backup.

Configuration saved to /etc/target/saveconfig.json

# edit the wwn of glfsLUN to the one copied from Node1 and save

[root@Node2 ~]# vi /etc/target/saveconfig.json

[root@Node2 ~]# systemctl restart target

[root@Node2 ~]# targetcli

targetcli shell version 2.1.fb42

Copyright 2011-2013 by Datera, Inc and others.

For help on commands, type 'help'.

# create a target

/> /iscsi/ create iqn.2016-06.org.gluster:Node2

Created target iqn.2016-06.org.gluster:Node2.

Created TPG 1.

Global pref auto_add_default_portal=true

Created default portal listening on all IPs (0.0.0.0), port 3260.

# set LUN

/> cd /iscsi/iqn.2016-06.org.gluster:Node2/tpg1/luns

iscsi/iqn.20...de2/tpg1/luns> create /backstores/user:glfs/glfsLUN 0

Created LUN 0.

iscsi/iqn.20...de2/tpg1/luns> cd /

# set ACL (it's the IQN of an initiator you permit to connect)

# Copy InitiatorName from Docker host machine

# which will be in ‘/etc/iscsi/initiatorname.iscsi’

# In my case it is iqn.1994-05.com.redhat:8277148d16b2, used in the next commands

/> cd /iscsi/iqn.2016-06.org.gluster:Node2/tpg1/acls

/iscsi/iqn.20...de2/tpg1/acls> create iqn.1994-05.com.redhat:8277148d16b2

Created Node ACL for iqn.1994-05.com.redhat:8277148d16b2

Created mapped LUN 0.

# set UserID and password for authentication

/iscsi/iqn.20...de2/tpg1/acls> cd iqn.1994-05.com.redhat:8277148d16b2

/iscsi/iqn.20...:8277148d16b2> set auth userid=username

Parameter userid is now 'username'.

/iscsi/iqn.20...:8277148d16b2> set auth password=password

Parameter password is now 'password'.

/iscsi/iqn.20...:8277148d16b2> cd /

/> saveconfig

Last 10 configs saved in /etc/target/backup.

Configuration saved to /etc/target/saveconfig.json

/> exit

Global pref auto_save_on_exit=true

Last 10 configs saved in /etc/target/backup.

Configuration saved to /etc/target/saveconfig.json

Note: repeat the Node2 setup on Node3 as well, substituting Node3 for Node2. The steps are not repeated here to avoid duplication.
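On Node2 and Node3, the manual `vi` edit of /etc/target/saveconfig.json can be replaced by a small script. This is a sketch under stated assumptions: python3 is installed, and the storage object is named glfsLUN as above. `set_wwn` is a hypothetical helper. It rewrites the file with two-space indentation and sorted keys, so the layout may differ cosmetically from what targetcli wrote.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set the wwn of the named storage object (default
# glfsLUN) in a targetcli saveconfig.json; fails if the object is missing.
# set_wwn CONFIG WWN [NAME]
set_wwn() {
    python3 - "$1" "$2" "${3:-glfsLUN}" <<'EOF'
import json, sys
path, wwn, name = sys.argv[1:4]
with open(path) as f:
    cfg = json.load(f)
found = False
for so in cfg.get("storage_objects", []):
    if so.get("name") == name:
        so["wwn"] = wwn
        found = True
if not found:
    sys.exit("storage object %r not found in %s" % (name, path))
with open(path, "w") as f:
    json.dump(cfg, f, indent=2, sort_keys=True)
    f.write("\n")
EOF
}
```

On Node2 (and Node3), after creating the backstore and exiting targetcli, run `set_wwn /etc/target/saveconfig.json cdc1e292-c21a-41ce-aa3f-d49658633bdf` followed by `systemctl restart target`, then continue with the target, LUN and ACL steps.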

Setting up iSCSI initiator

On the fourth machine:

[root@DkNode ~]# dnf install iscsi-initiator-utils sg3_utils

# multipathing to achieve high availability

[root@DkNode ~]# mpathconf

multipath is enabled

find_multipaths is enabled

user_friendly_names is enabled

dm_multipath module is not loaded

multipathd is not running

[root@DkNode ~]# modprobe dm_multipath

[root@DkNode ~]# lsmod | grep dm_multipath

dm_multipath 24576 0

[root@DkNode ~]# cat /etc/multipath.conf

cat: /etc/multipath.conf: No such file or directory

[root@DkNode ~]# mpathconf --enable

[root@DkNode ~]# cat >> /etc/multipath.conf

# LIO iSCSI

devices {

device {

vendor "LIO-ORG"

user_friendly_names "yes" # names like mpatha

path_grouping_policy "failover" # one path per group

path_selector "round-robin 0"

path_checker "tur"

prio "const"

rr_weight "uniform"

}

}

^D

[root@DkNode ~]# systemctl start multipathd

[root@DkNode ~]# mpathconf

multipath is enabled

find_multipaths is enabled

user_friendly_names is enabled

dm_multipath module is loaded

multipathd is running

[root@DkNode ~]# vi /etc/iscsi/iscsid.conf

# uncomment the line below

node.session.auth.authmethod = CHAP

[...]

# uncomment and edit the username and password if required

node.session.auth.username = username

node.session.auth.password = password

[root@DkNode ~]# systemctl restart iscsid

[root@DkNode ~]# systemctl enable iscsid

# one way to log in to the iSCSI targets: discover each portal and log in (-l) in one step

[root@DkNode ~]# iscsiadm -m discovery -t st -p Node1 -l

Node1:3260,1 iqn.2016-06.org.gluster:Node1

Logging in to [iface: default, target: iqn.2016-06.org.gluster:Node1, portal: Node1,3260] (multiple)

Login to [iface: default, target: iqn.2016-06.org.gluster:Node1, portal: Node1,3260] successful.

[root@DkNode ~]# iscsiadm -m discovery -t st -p Node2 -l

Node2:3260,1 iqn.2016-06.org.gluster:Node2

Logging in to [iface: default, target: iqn.2016-06.org.gluster:Node2, portal: Node2,3260] (multiple)

Login to [iface: default, target: iqn.2016-06.org.gluster:Node2, portal: Node2,3260] successful.

[root@DkNode ~]# iscsiadm -m discovery -t st -p Node3 -l

Node3:3260,1 iqn.2016-06.org.gluster:Node3

Logging in to [iface: default, target: iqn.2016-06.org.gluster:Node3, portal: Node3,3260] (multiple)

Login to [iface: default, target: iqn.2016-06.org.gluster:Node3, portal: Node3,3260] successful.

# here you can see three paths to the same device

[root@DkNode ~]# multipath -ll

mpatha (36001405cdc1e292c21a41ceaa3fd4965) dm-2 LIO-ORG ,TCMU device

size=8.0G features='0' hwhandler='0' wp=rw

`-+- policy='queue-length 0' prio=1 status=active

|- 2:0:0:0 sda 8:0 active ready running

|- 3:0:0:0 sdb 8:16 active ready running

`- 4:0:0:0 sdc 8:32 active ready running

[root@DkNode ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 8G 0 disk

└─mpatha 253:2 0 8G 0 mpath

sdb 8:16 0 8G 0 disk

└─mpatha 253:2 0 8G 0 mpath

sdc 8:32 0 8G 0 disk

└─mpatha 253:2 0 8G 0 mpath

[...]
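The "three paths" check can be scripted, for example to run after a gateway comes back from maintenance. This is a minimal sketch. The hypothetical helper `expect_paths` reads `multipath -ll` output for a single map on stdin. It fails unless the expected number of paths are in the "active ready running" state, and it assumes the output format shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `multipath -ll` output for one map on stdin and
# fail unless exactly WANT paths are reported as "active ready running".
# expect_paths WANT
expect_paths() {
    local want=$1 have
    have=$(grep -c 'active ready *running' || true)
    if [ "$have" -ne "$want" ]; then
        echo "expected $want active paths, found $have" >&2
        return 1
    fi
}
```

Usage: `multipath -ll mpatha | expect_paths 3`. With one gateway down, you would expect it to report fewer active paths.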

Some ways to check that multipathing is working as expected:

# on sda, sdb and sdc, check that the wwns are the same and the nodes are as expected

[root@DkNode ~]# sg_inq -i /dev/sda

VPD INQUIRY: Device Identification page

Designation descriptor number 1, descriptor length: 49

designator_type: T10 vendor identification, code_set: ASCII

associated with the addressed logical unit

vendor id: LIO-ORG

vendor specific: cdc1e292-c21a-41ce-aa3f-d49658633bdf

Designation descriptor number 2, descriptor length: 20

designator_type: NAA, code_set: Binary

associated with the addressed logical unit

NAA 6, IEEE Company_id: 0x1405

Vendor Specific Identifier: 0xcdc1e292c

Vendor Specific Identifier Extension: 0x21a41ceaa3fd4965

[0x6001405cdc1e292c21a41ceaa3fd4965]

Designation descriptor number 3, descriptor length: 51

designator_type: vendor specific [0x0], code_set: ASCII

associated with the addressed logical unit

vendor specific: glfs/nonshared-store@Node1/app-store.img

[root@DkNode ~]# sg_inq -i /dev/sdb

VPD INQUIRY: Device Identification page

Designation descriptor number 1, descriptor length: 49

designator_type: T10 vendor identification, code_set: ASCII

associated with the addressed logical unit

vendor id: LIO-ORG

vendor specific: cdc1e292-c21a-41ce-aa3f-d49658633bdf

Designation descriptor number 2, descriptor length: 20

designator_type: NAA, code_set: Binary

associated with the addressed logical unit

NAA 6, IEEE Company_id: 0x1405

Vendor Specific Identifier: 0xcdc1e292c

Vendor Specific Identifier Extension: 0x21a41ceaa3fd4965

[0x6001405cdc1e292c21a41ceaa3fd4965]

Designation descriptor number 3, descriptor length: 52

designator_type: vendor specific [0x0], code_set: ASCII

associated with the addressed logical unit

vendor specific: glfs/nonshared-store@Node2/app-store.img

[root@DkNode ~]# sg_inq -i /dev/sdc

VPD INQUIRY: Device Identification page

Designation descriptor number 1, descriptor length: 49

designator_type: T10 vendor identification, code_set: ASCII

associated with the addressed logical unit

vendor id: LIO-ORG

vendor specific: cdc1e292-c21a-41ce-aa3f-d49658633bdf

Designation descriptor number 2, descriptor length: 20

designator_type: NAA, code_set: Binary

associated with the addressed logical unit

NAA 6, IEEE Company_id: 0x1405

Vendor Specific Identifier: 0xcdc1e292c

Vendor Specific Identifier Extension: 0x21a41ceaa3fd4965

[0x6001405cdc1e292c21a41ceaa3fd4965]

Designation descriptor number 3, descriptor length: 51

designator_type: vendor specific [0x0], code_set: ASCII

associated with the addressed logical unit

vendor specific: glfs/nonshared-store@Node3/app-store.img

[root@DkNode ~]# ls -l /dev/mapper/

total 0

[...]

lrwxrwxrwx. 1 root root 7 Jun 21 12:13 mpatha -> ../dm-2

[root@DkNode ~]# sg_inq -i /dev/mapper/mpatha

VPD INQUIRY: Device Identification page

Designation descriptor number 1, descriptor length: 49

designator_type: T10 vendor identification, code_set: ASCII

associated with the addressed logical unit

vendor id: LIO-ORG

vendor specific: cdc1e292-c21a-41ce-aa3f-d49658633bdf

Designation descriptor number 2, descriptor length: 20

designator_type: NAA, code_set: Binary

associated with the addressed logical unit

NAA 6, IEEE Company_id: 0x1405

Vendor Specific Identifier: 0xcdc1e292c

Vendor Specific Identifier Extension: 0x21a41ceaa3fd4965

[0x6001405cdc1e292c21a41ceaa3fd4965]

Designation descriptor number 3, descriptor length: 51

designator_type: vendor specific [0x0], code_set: ASCII

associated with the addressed logical unit

vendor specific: glfs/nonshared-store@Node3/app-store.img
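The output above shows why a shared wwn yields a single multipath map. In every sg_inq listing, the NAA designator is "6", then the IEEE company id 001405, then the first 25 hex digits of the wwn. multipath reports the same value with a leading "3" as the map's WWID (36001405cdc1e292c21a41ceaa3fd4965). The sketch below reproduces that pattern. It is inferred from this output, not taken from LIO documentation. It assumes the wwn is a UUID-style string, and the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers reproducing the identifiers seen in the output above.
# Pattern (inferred from this output): NAA type 6 + IEEE company id 001405 +
# the first 25 hex digits of the wwn; multipath's WWID adds a leading "3".
naa_from_wwn() {
    local vs
    # Drop the dashes, lower-case the hex digits and keep the first 25.
    vs=$(printf '%s' "$1" | sed 's/-//g' | tr 'A-F' 'a-f' | cut -c1-25)
    printf '6001405%s\n' "$vs"
}

wwid_from_wwn() {
    printf '3%s\n' "$(naa_from_wwn "$1")"
}
```

For the wwn used here, `wwid_from_wwn cdc1e292-c21a-41ce-aa3f-d49658633bdf` prints 36001405cdc1e292c21a41ceaa3fd4965, the name shown by `multipath -ll`. A gateway with a different wwn would produce a different WWID and therefore a separate device.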

Next, we partition the target device, create a filesystem on it and mount it locally on the iSCSI initiator/Docker host:

# partition the disk

[root@DkNode ~]# sgdisk -n 1:2048 /dev/mapper/mpatha

Creating new GPT entries.

Warning: The kernel is still using the old partition table.

The new table will be used at the next reboot or after you run partprobe(8) or kpartx(8)

The operation has completed successfully.

# make the kernel re-read the new partition table

[root@DkNode ~]# partprobe /dev/mapper/mpatha

# check that the partition appeared as expected

[root@DkNode ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 8G 0 disk

└─mpatha 253:2 0 8G 0 mpath

└─mpatha1 253:3 0 8G 0 part

sdb 8:16 0 8G 0 disk

└─mpatha 253:2 0 8G 0 mpath

└─mpatha1 253:3 0 8G 0 part

sdc 8:32 0 8G 0 disk

└─mpatha 253:2 0 8G 0 mpath

└─mpatha1 253:3 0 8G 0 part

[...]

[root@DkNode ~]# sgdisk -p /dev/mapper/mpatha

Disk /dev/mapper/mpatha: 16777216 sectors, 8.0 GiB

Logical sector size: 512 bytes

Disk identifier (GUID): 71183D83-4290-41F4-8EF9-69B3D14495F8

Partition table holds up to 128 entries

First usable sector is 34, last usable sector is 16777182

Partitions will be aligned on 2048-sector boundaries

Total free space is 2014 sectors (1007.0 KiB)

Number Start (sector) End (sector) Size Code Name

1 2048 16777182 8.0 GiB 8300

[root@DkNode ~]# ls -l /dev/mapper/

total 0

[...]

lrwxrwxrwx. 1 root root 7 Jun 21 12:23 mpatha -> ../dm-2

lrwxrwxrwx. 1 root root 7 Jun 21 12:23 mpatha1 -> ../dm-3

# format the target device

[root@DkNode ~]# mkfs.xfs /dev/mapper/mpatha1

meta-data=/dev/mapper/mpatha1 isize=512 agcount=4, agsize=524223 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=0

data = bsize=4096 blocks=2096891, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

# mount the iSCSI target

[root@DkNode ~]# mkdir /root/nonshared-store/

[root@DkNode ~]# mount /dev/mapper/mpatha1 /root/nonshared-store/

[root@DkNode ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

[...]

/dev/mapper/mpatha1 xfs 8.0G 33M 8.0G 1% /root/nonshared-store/
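The figures printed by `sgdisk -p` can be checked by hand. This sketch assumes 512-byte logical sectors and the default 128-entry GPT, whose 128 × 128-byte entries take 32 sectors at each end of the disk. `gpt_layout` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: recompute the sgdisk -p figures for one partition that
# starts at START and runs to the last usable sector. Assumes 512-byte
# sectors and the default 128-entry GPT (32 sectors of entries per copy).
# gpt_layout TOTAL_SECTORS START
gpt_layout() {
    local total=$1 start=$2
    local first_usable=34                 # LBA 0 MBR, LBA 1 header, LBAs 2-33 entries
    local last_usable=$(( total - 34 ))   # backup entries (32) + backup header (1) at the end
    local part_sectors=$(( last_usable - start + 1 ))
    local free_sectors=$(( start - first_usable ))   # gap left by 2048-sector alignment
    echo "last_usable=$last_usable"
    echo "part_sectors=$part_sectors"
    echo "free_sectors=$free_sectors"
    echo "free_kib=$(( free_sectors * 512 / 1024 ))"
}
```

`gpt_layout 16777216 2048` prints last_usable=16777182, part_sectors=16775135, free_sectors=2014 and free_kib=1007. These match "last usable sector is 16777182" and "Total free space is 2014 sectors (1007.0 KiB)" above. The 16777216 sectors × 512 bytes are the 8589934592 bytes (8 GiB) reported when the glfsLUN storage object was created.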

Note that in our setup the iSCSI initiator is also the Docker host, and vice versa.

Setting up Docker host and container

[root@DkNode ~]# touch /root/nonshared-store/{1..10}

[root@DkNode ~]# ls /root/nonshared-store/

1 10 2 3 4 5 6 7 8 9

[root@DkNode ~]# cat > /etc/yum.repos.d/docker.repo

[dockerrepo]

name=Docker Repository

baseurl=https://yum.dockerproject.org/repo/main/fedora/$releasever/

enabled=1

gpgcheck=1

gpgkey=https://yum.dockerproject.org/gpg

^D

[root@DkNode ~]# dnf install docker-engine

[root@DkNode ~]# systemctl start docker

# create a container

[root@DkNode ~]# docker run --name bindmount -v /root/nonshared-store/:/mnt:z -t -i fedora /bin/bash

--name Assign a name to the container

-v Create a bind mount, given as [[HOST-DIR:]CONTAINER-DIR[:OPTIONS]]; here the host directory /root/nonshared-store/ appears as /mnt inside the container

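Option z: relabel the host directory with a shared SELinux label so the container can access it (instead of ':z' you could run # setenforce 0, which puts SELinux in permissive mode for the whole host)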

-t Allocate a pseudo-TTY

-i Keep STDIN open (Interactive)

# we now have an interactive TTY inside the container

[root@5bbb1e4cb8f8 /]# ls /mnt/

1 10 2 3 4 5 6 7 8 9

[root@5bbb1e4cb8f8 /]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

[...]

/dev/mapper/mpatha1 xfs 8.0G 33M 8.0G 1% /mnt

/dev/mapper/fedora_dhcp42--88-root xfs 15G 1.2G 14G 8% /etc/hosts

shm tmpfs 64M 0 64M 0% /dev/sh
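To confirm from the host that the container's /mnt really is the iSCSI-backed filesystem, you can run df inside the running container and filter its output on the host. This is a minimal sketch. `source_of` is a hypothetical helper, and it assumes mount points without spaces and one filesystem per output line (`df -P` avoids line wrapping).

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `df -T`-style output on stdin and print the
# filesystem (device) mounted at MOUNTPOINT, i.e. the first column of the
# line whose last column equals MOUNTPOINT.
# source_of MOUNTPOINT
source_of() {
    awk -v mp="$1" 'NR > 1 && $NF == mp { print $1 }'
}
```

While the container is running, `docker exec bindmount df -PT | source_of /mnt` should print /dev/mapper/mpatha1, as in the listing above.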

Conclusion

This just showcases how Gluster can be used as a distributed block store for containers. More details about high availability, integration with Kubernetes, etc. will follow in future posts.

References

http://rootfs.github.io/iSCSI-Kubernetes/

http://blog.gluster.org/2016/04/using-lio-with-gluster/

https://docs.docker.com/engine/tutorials/dockervolumes/

http://scst.sourceforge.net/scstvslio.html

http://events.linuxfoundation.org/sites/events/files/slides/tcmu-bobw_0.pdf

https://www.kernel.org/doc/Documentation/target/tcmu-design.txt

https://lwn.net/Articles/424004/

http://www.gluster.org/community/documentation/index.php/GlusterFS_Documentation

I am somehow unable to connect the use of the 4th node here, which you have used for setting up multipath. Could you elaborate on this part a bit?

Hi Atin,

Node4 serves as both the iSCSI initiator and the Docker host.

As iSCSI initiator:

As mentioned, iSCSI works on a client-server model. We have exported the LUNs from the three gateways/nodes from which the target file is served, and Node4 acts as the client: it initiates the iSCSI session and gets the target block device attached to itself.

As Docker Host:

We chose to run the Docker host on the same node because the target device is attached there. We can mount it on Node4 itself and run the Docker container there with a bind mount, so the container can access the target device.

Cheers,

HTH