Index:

- About Gluster

- Getting some Background about Gluster

- Hyper Converged setups

- About Benchmarking sets

- Benchmarking

- How to create Benchmarking results

- How to visualize the results

- Summary

About Gluster:

Gluster is a well-known scale-out distributed file system, flexible in its design and use, whose aim is to provide high availability of data. Gluster doesn’t use a central metadata server, which avoids both a metadata bottleneck and a single point of failure.

The best part of Gluster is how easy it is to set up and use. You can add or remove servers whenever you need to, so maintenance is easy for small, medium and large organizations alike. And of course, it is all open source.

Requirements for the Benchmark:

Alright! To understand this, you need a basic idea of the GlusterFS networking design. I will try to explain it in very simple terms.

Nodes/Servers:

The systems that act as storage servers.

Bricks:

Each server can have one or more bricks. For simplicity, think of a brick as a directory on the server where the actual data is stored.

Volume:

A volume is a logical collection of bricks from one or more servers (storage nodes), exposed as a single file system.

Client:

The machine where we mount and access the volume (the whole file system).

glusterd:

It is a daemon that runs on all the servers. It manages communication between the client side and the server side: it talks to the bricks and serves that information to the client-side process.
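To make the relationship between these terms concrete, here is a small Python model of nodes, bricks and volumes. It is only a conceptual sketch. The class names `Brick` and `Volume` are hypothetical and are not Gluster's internal types. The only thing borrowed from Gluster is the `server:/path` notation, which is how bricks are named on the `gluster volume create` command line.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Brick:
    """A brick: a directory on one storage server where the real data lives."""
    server: str
    path: str

    @classmethod
    def parse(cls, spec: str) -> "Brick":
        # Bricks are named "server:/path", e.g. "node1:/bricks/b1".
        server, sep, path = spec.partition(":")
        if not sep or not server or not path.startswith("/"):
            raise ValueError(f"invalid brick spec: {spec!r}")
        return cls(server, path)


@dataclass
class Volume:
    """A volume: bricks from one or more servers exposed as one file system."""
    name: str
    bricks: list[Brick] = field(default_factory=list)

    def add_brick(self, spec: str) -> None:
        self.bricks.append(Brick.parse(spec))

    def servers(self) -> list[str]:
        # Distinct storage nodes, in the order their first brick was added.
        seen: list[str] = []
        for brick in self.bricks:
            if brick.server not in seen:
                seen.append(brick.server)
        return seen


if __name__ == "__main__":
    vol = Volume("sample")
    for spec in ("node1:/bricks/b1", "node1:/bricks/b2", "node2:/bricks/b1"):
        vol.add_brick(spec)
    print(vol.servers())  # ['node1', 'node2']
```

One server (node1) can contribute several bricks. The volume only sees the list of bricks, which is why adding or removing servers comes down to adding or removing bricks.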

glusterd: the management daemon. It runs on all servers and listens on port 24007.

glusterfsd: the brick process.

    tcp   0   0 0.0.0.0:49152      0.0.0.0:*           LISTEN       22599/glusterfsd
    tcp   0   0 0.0.0.0:24007      0.0.0.0:*           LISTEN       22499/glusterd
    tcp   0   0 10.70.1.86:24007   198.168.0.1:65534   ESTABLISHED  22499/glusterd
    tcp   0   0 10.70.1.86:65534   198.186.0.1:24007   ESTABLISHED  22599/glusterfsd

Here "198.168.0.1" is my server address. As you can see above, a connection is established between glusterd and glusterfsd, i.e. between the management daemon and the brick process.

glusterfs: the client-side process.

    tcp   0   0 10.70.1.86:49152   10.70.1.86:65531    ESTABLISHED  22599/glusterfsd
    tcp   0   0 10.70.1.86:65533   10.70.1.86:24007    ESTABLISHED  23027/glusterfs
    tcp   0   0 10.70.1.86:65531   10.70.1.86:49152    ESTABLISHED  23027/glusterfs
    tcp   0   0 10.70.1.86:24007   10.70.1.86:65533    ESTABLISHED  22499/glusterd

From the above, we can conclude:

glusterd talks to the brick process and to the client for management purposes.

The actual data transfer happens directly between the client process and the brick process. To date, this communication always uses TCP.
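These conclusions can be read off mechanically. The Python sketch below takes lines in the netstat-style format shown above. Their columns are protocol, receive queue, send queue, local address, foreign address, state and PID/program.

The sketch labels a connection as management traffic when either end uses glusterd's port 24007, and as data traffic otherwise. That port rule is a simplification that happens to fit these samples. The sketch also flags connections whose two ends share an IP address, which is the case that matters for the hyper-converged setups discussed next. `classify` and `Conn` are hypothetical helpers, not part of Gluster.

```python
from typing import NamedTuple

GLUSTERD_PORT = 24007  # management port of glusterd, as noted above


class Conn(NamedTuple):
    program: str
    local: str
    foreign: str
    role: str        # "listening", "management" or "data"
    same_host: bool  # True when both ends of an open connection share an IP


def _split(endpoint: str) -> tuple[str, str]:
    # "10.70.1.86:24007" -> ("10.70.1.86", "24007"); works for "0.0.0.0:*" too
    host, _, port = endpoint.rpartition(":")
    return host, port


def classify(line: str) -> Conn:
    """Classify one netstat-style line (Proto Recv-Q Send-Q Local Foreign State PID/Program)."""
    _proto, _recvq, _sendq, local, foreign, state, pid_prog = line.split()[:7]
    program = pid_prog.split("/", 1)[1]
    local_host, local_port = _split(local)
    foreign_host, foreign_port = _split(foreign)
    if state == "LISTEN":
        role = "listening"  # foreign port is "*", so no port check here
    elif GLUSTERD_PORT in (int(local_port), int(foreign_port)):
        role = "management"
    else:
        role = "data"
    same_host = state != "LISTEN" and local_host == foreign_host
    return Conn(program, local, foreign, role, same_host)


if __name__ == "__main__":
    line = "tcp 0 0 10.70.1.86:65531 10.70.1.86:49152 ESTABLISHED 23027/glusterfs"
    print(classify(line))
```

The client-to-brick line used in the usage example is classified as same-host data traffic. In other words, it is a TCP connection that never leaves the machine.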

Hyper Converged setups:

Setting these Gluster concepts aside, let's move to another world: integrated clusters of storage and virtualization.

You have probably already heard of virtual clusters. A virtual cluster is a combination of several virtual machines (VMs) running on one or more physical clusters. The VMs are interconnected logically by a virtual network that spans several physical networks. A virtual cluster can be formed from physical machines or from VMs hosted by multiple physical clusters.

So a virtual cluster is a combination of several VMs. Here each VM is used only for computation, so it can also be called a Compute Node.

Back to Gluster! Each node used in Gluster can be called a Storage Node.

If the Compute Node and the Storage Node live in the same VM, and several such nodes are clustered together, they form a hyper-converged setup. This can be achieved very easily with Gluster.

About Benchmarking Sets:

As mentioned above, communication between the client process and the brick process currently uses TCP. In hyper-converged setups the server and the client can sit on the same machine. Even so, the client talks to the brick over a TCP loopback connection.

I have written a patch that tries to detect whether the client and the brick are on the same machine. If they are, it initiates the connection over Unix Domain Sockets (UDS) instead of a TCP loopback connection.

The purpose of this benchmark is to measure the performance improvement that this patch gains by using UDS.
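Before running IOzone, the raw transport difference can be checked with a micro-benchmark. The Python sketch below times request/echo round trips over a TCP loopback connection and over a UDS connection on the same machine.

It measures only socket overhead, not GlusterFS IO. The 4 KiB message size and the round count are arbitrary choices made for this sketch. Numbers will vary with kernel and hardware, so treat it as a sanity check rather than a substitute for the IOzone runs.

```python
import os
import socket
import tempfile
import threading
import time

PAYLOAD = b"x" * 4096  # 4 KiB per message: an arbitrary size for this sketch


def _no_delay(sock: socket.socket) -> None:
    # Disable Nagle's algorithm on TCP so echoes are not held back.
    if sock.family in (socket.AF_INET, socket.AF_INET6):
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)


def _echo_once(listener: socket.socket) -> None:
    # Serve a single connection: echo everything until the client closes.
    conn, _ = listener.accept()
    with conn:
        _no_delay(conn)
        while data := conn.recv(65536):
            conn.sendall(data)


def _recv_exactly(sock: socket.socket, n: int) -> None:
    remaining = n
    while remaining:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("peer closed the connection early")
        remaining -= len(chunk)


def round_trips(family: int, address, rounds: int = 1000) -> float:
    """Seconds taken by `rounds` send/echo round trips over one connection."""
    with socket.socket(family, socket.SOCK_STREAM) as listener:
        listener.bind(address)
        listener.listen(1)
        server = threading.Thread(target=_echo_once, args=(listener,), daemon=True)
        server.start()
        with socket.socket(family, socket.SOCK_STREAM) as client:
            client.connect(listener.getsockname())
            _no_delay(client)
            start = time.perf_counter()
            for _ in range(rounds):
                client.sendall(PAYLOAD)
                _recv_exactly(client, len(PAYLOAD))
            elapsed = time.perf_counter() - start
        server.join()
    return elapsed


if __name__ == "__main__":
    tcp = round_trips(socket.AF_INET, ("127.0.0.1", 0))
    with tempfile.TemporaryDirectory() as tmp:
        uds = round_trips(socket.AF_UNIX, os.path.join(tmp, "echo.sock"))
    print(f"TCP loopback: {tcp:.4f} s, UDS: {uds:.4f} s")
```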

Benchmarking:

Tools used:

IOzone, for benchmarking

IOzone results comparator, for analysing the results

1. How to create benchmarking results:

About Iozone:

IOzone is a file system benchmark tool. It measures IO performance for operations such as read, write, re-read, re-write, read backwards, strided read, fread, fwrite and random read.

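Installation:

    # wget http://www.iozone.org/src/current/iozone3_434.tar
    # tar -xf iozone3_434.tar
    # cd ./iozone3_434/src/current
    # make linux

Running the benchmark (the command is the same for both sets: run it on the unpatched build for the TCP baseline, and on the patched build for UDS):

    # ./iozone -U /mnt/sample -a -f /mnt/sample/testfile -n 4k -g 1024m > with_tcp.iozone
    # ./iozone -U /mnt/sample -a -f /mnt/sample/testfile -n 4k -g 1024m > with_uds.iozone

Options:

    -a   Auto mode
    -f   Filename to use for IO
    -n   Minimum file size for auto mode (in kBytes by default; k, m and g suffixes are accepted)
    -g   Maximum file size for auto mode (in kBytes by default; k, m and g suffixes are accepted)
    -U   Mount point to unmount and remount between tests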

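Run IOzone 5 times each for the baseline set (with TCP) and the target set (with UDS), so that run-to-run variation is averaged out of the results. Write each run to its own file (for example with_tcp_1.iozone to with_tcp_5.iozone) so that the with_tcp* and with_uds* patterns passed to the comparator pick up every run.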
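Collecting five samples per set is easy to script. Here is a minimal Python sketch, assuming IOzone was built in the current directory (as in the installation steps above) and the volume is mounted at /mnt/sample. The helper names and the `_1` to `_5` file suffixes are my own choice. The suffixes are picked so that the comparator's `with_tcp*` and `with_uds*` patterns match every run.

```python
import subprocess
import sys

MOUNT = "/mnt/sample"  # GlusterFS mount point from the commands above


def iozone_command(iozone: str = "./iozone", mount: str = MOUNT) -> list[str]:
    """The auto-mode IOzone invocation used above, as an argument list."""
    return [iozone, "-U", mount, "-a", "-f", f"{mount}/testfile",
            "-n", "4k", "-g", "1024m"]


def output_names(label: str, samples: int = 5) -> list[str]:
    """One file per run, e.g. with_tcp_1.iozone ... with_tcp_5.iozone."""
    return [f"{label}_{i}.iozone" for i in range(1, samples + 1)]


def run_samples(label: str, samples: int = 5, iozone: str = "./iozone") -> None:
    for name in output_names(label, samples):
        with open(name, "w") as out:
            # check=True stops the loop as soon as one run fails.
            subprocess.run(iozone_command(iozone), stdout=out, check=True)


if __name__ == "__main__":
    # Pass "with_tcp" on the unpatched build, "with_uds" on the patched one.
    run_samples(sys.argv[1])
```

Passing an argument list to `subprocess.run` and redirecting stdout to a file mirrors the `> with_tcp.iozone` redirection without going through a shell.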

2. How to visualize the results:

Now that the IOzone result sets are saved, let's process the raw results and generate some charts.

About Iozone results comparator:

Iozone-results-comparator is a Python application for analysing results of the IOzone filesystem benchmark. It produces an HTML page with tables and plots.
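At its core, the comparison is between repeated runs of the baseline set and of the target set. The stdlib Python sketch below illustrates only that basic idea. It reduces one test's throughput samples to a mean and a standard deviation, then reports the target's percent change over the baseline.

This is not the comparator's code. The tool itself does more, including the plots and tables. Extracting the numbers from the IOzone output files is left out here.

```python
from statistics import mean, stdev


def summarize(samples: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of repeated runs of one test."""
    spread = stdev(samples) if len(samples) > 1 else 0.0
    return mean(samples), spread


def percent_change(baseline: list[float], target: list[float]) -> float:
    """Change of the target mean relative to the baseline mean, in percent."""
    base_mean, _ = summarize(baseline)
    target_mean, _ = summarize(target)
    return 100.0 * (target_mean - base_mean) / base_mean
```

Looking at the standard deviation next to the percent change is what makes five samples per set worthwhile. A change smaller than the run-to-run spread says little about TCP versus UDS.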

Dependencies: python-scipy, python-matplotlib

Clone and run:

    # git clone https://code.google.com/p/iozone-results-comparator/
    # cd ./iozone-results-comparator/src
    # ./iozone_results_comparator.py --baseline ${PATH_TO_TCP_SETS}/with_tcp* --set1 ${PATH_TO_UDS_SETS}/with_uds*
    # firefox html_out/index.html

You should now be able to see the HTML page, which contains the plots and tables (as well as links to the TSV files).

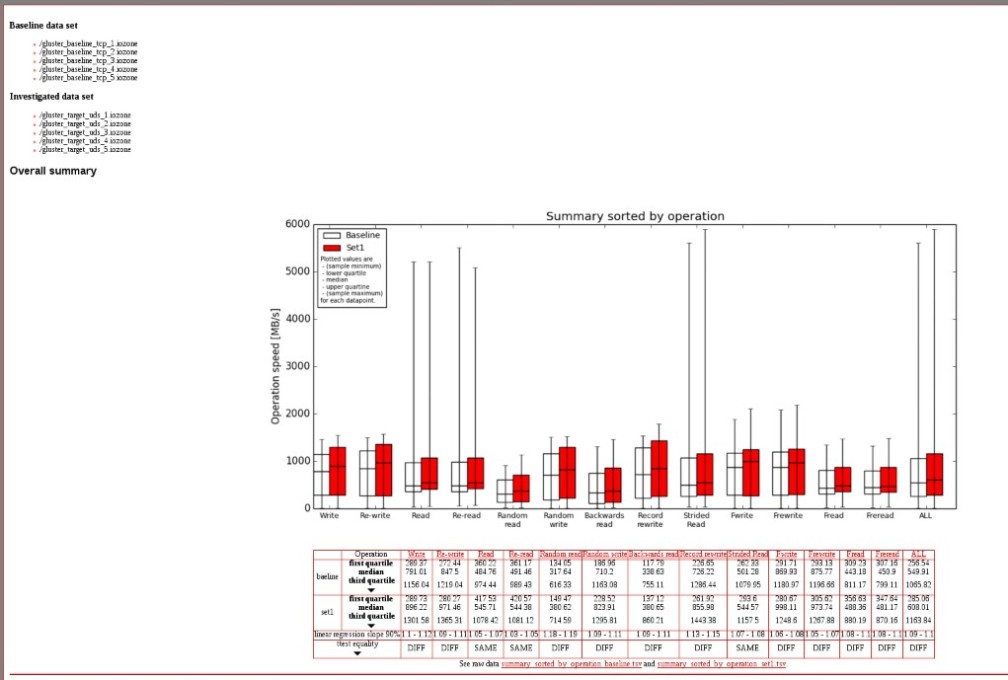

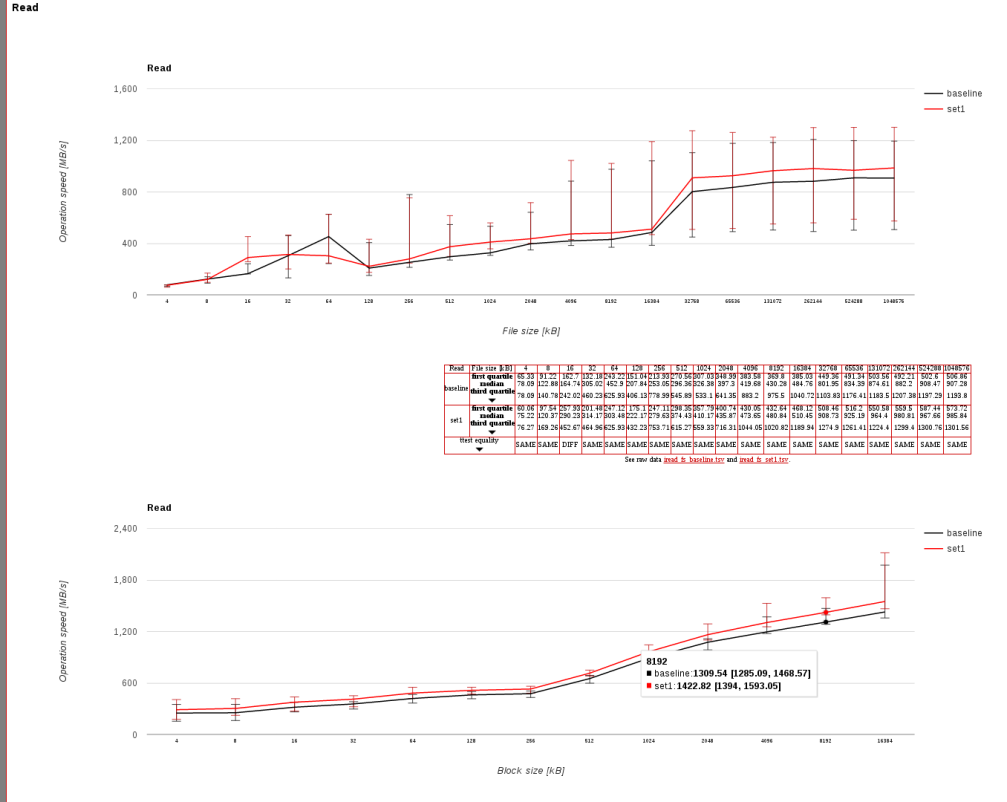

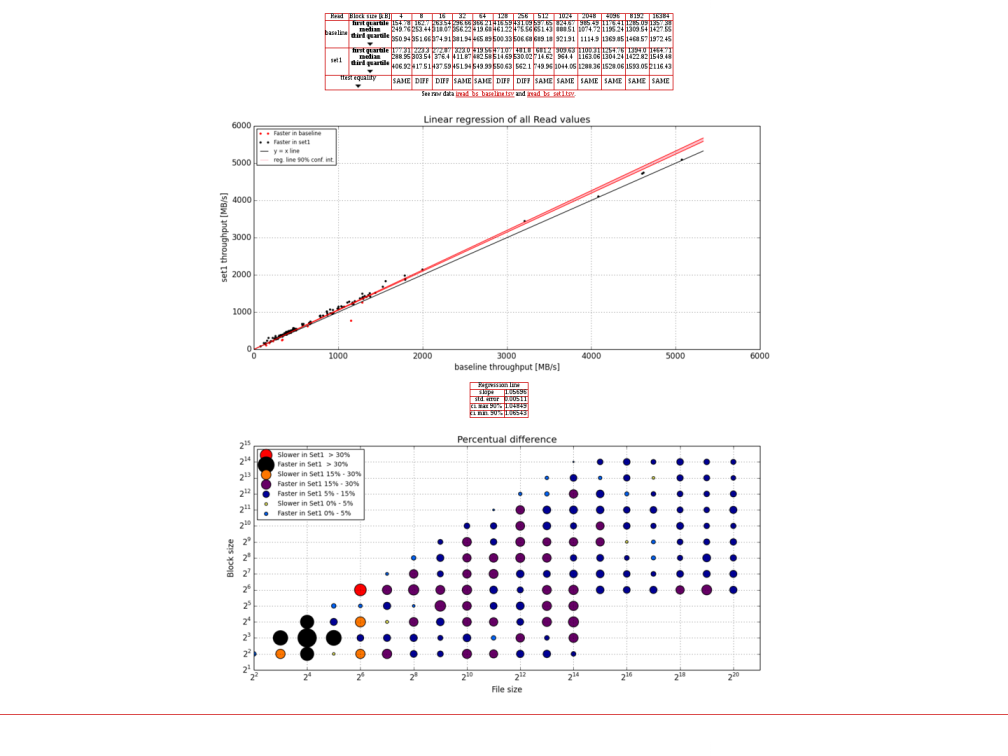

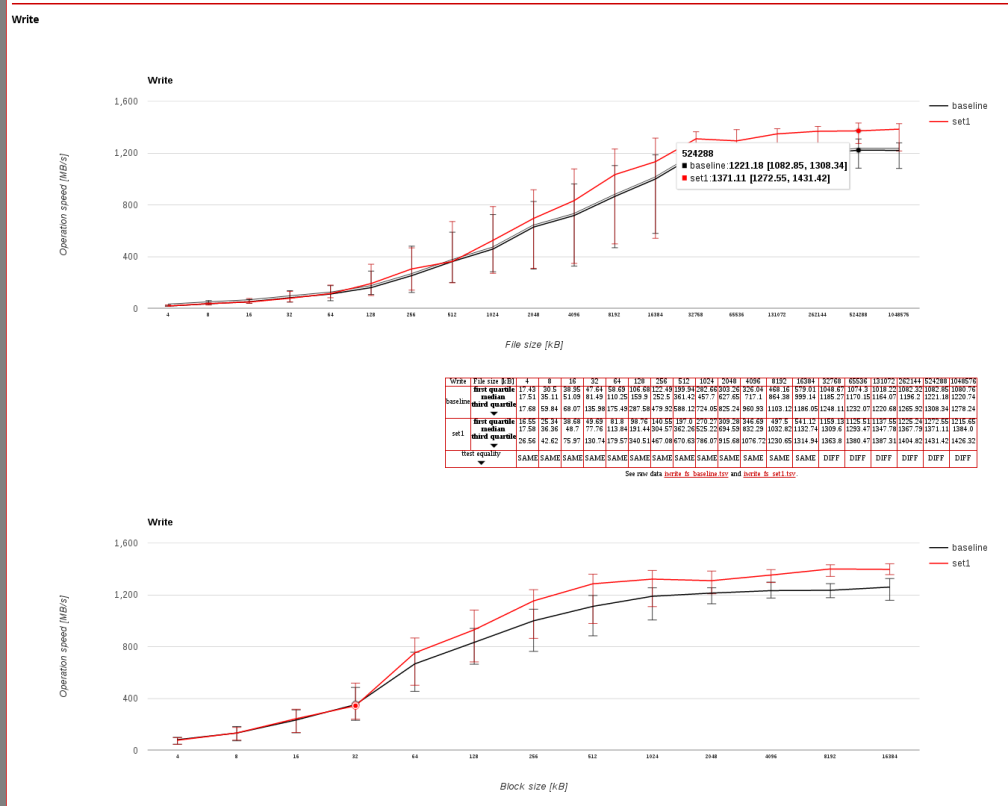

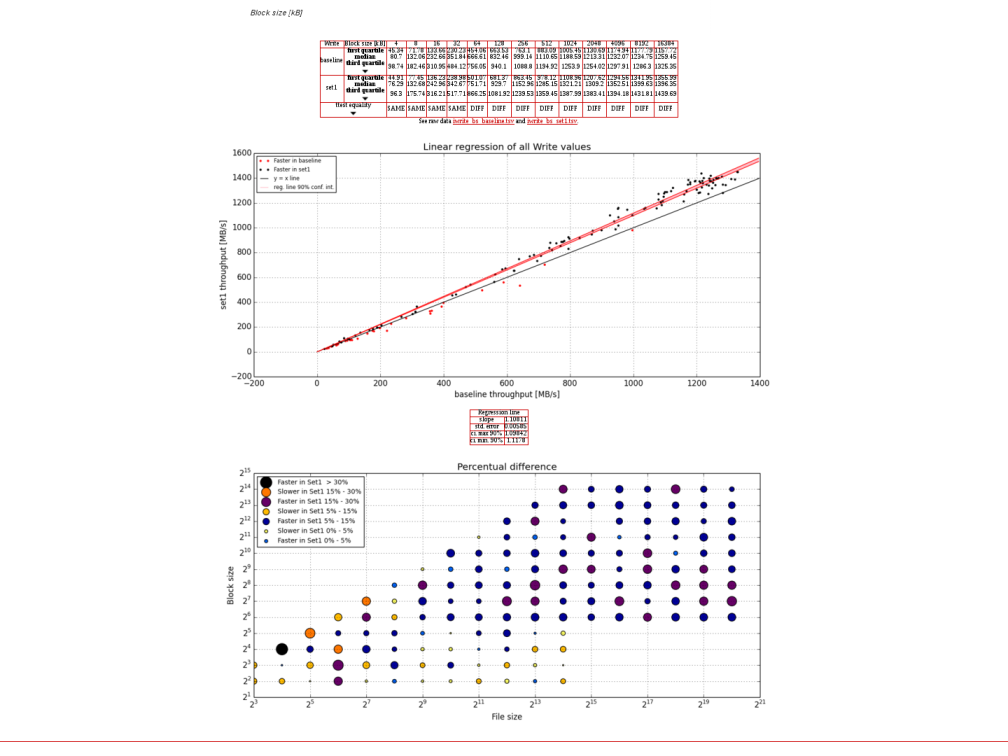

Some sample results (panels: Summary, Read Plot, Write Plot).

Summary:

The benchmark results show that communication between the brick process and the client over Unix domain sockets improves overall IO performance.

Using the IOzone tool and the IOzone results comparator together gives more reliable benchmark results and better visibility into them.